Intelligent Social Systems

Understanding the Application of Utility Theory in Robotics and Artificial Intelligence

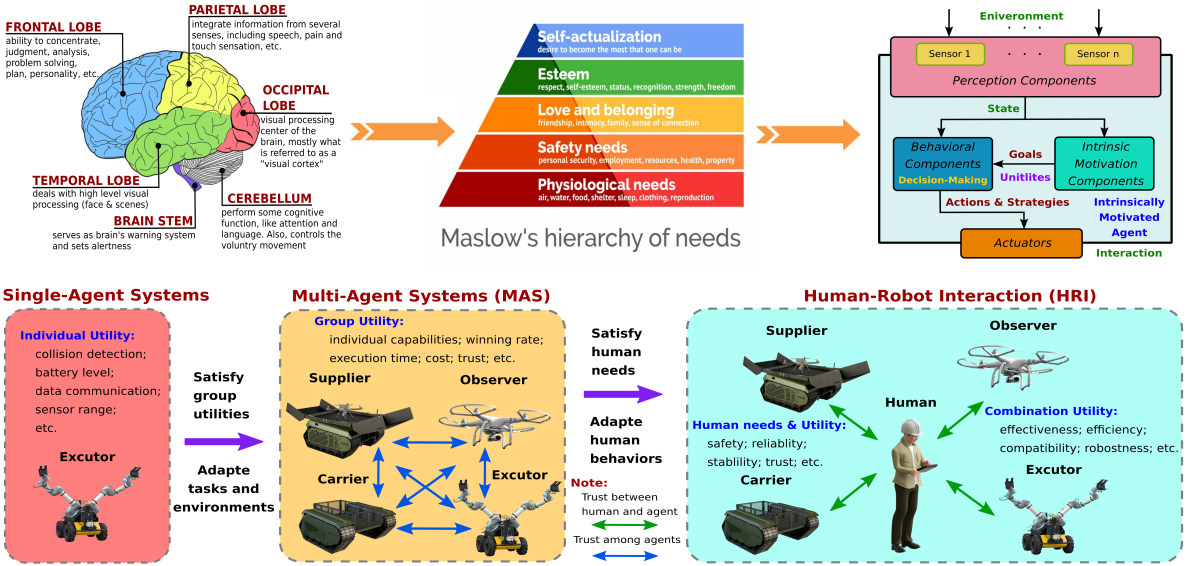

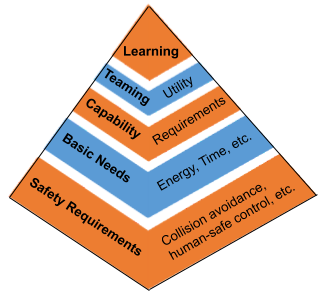

Utility is a unifying concept in economics, game theory, and operations research, and in robotics and AI it is used to evaluate the level of an individual's needs, preferences, and interests. This research introduces a utility-oriented needs paradigm to describe and evaluate the inner and outer relationships in agents' interactions. We then survey existing literature in relevant fields to support the paradigm and propose several promising research directions, along with open problems that warrant further investigation.

Agent Learning

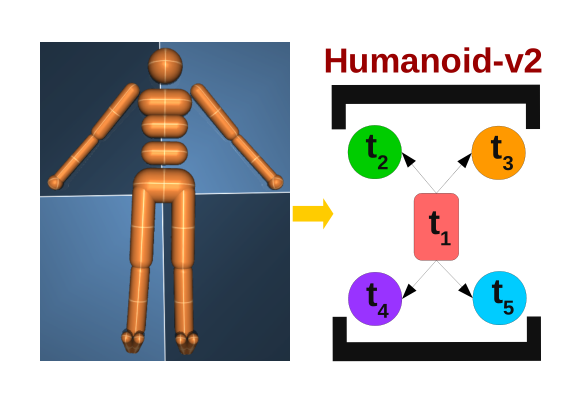

Bayesian Strategy Network (BSN) based Soft Actor Critic in Deep Reinforcement Learning

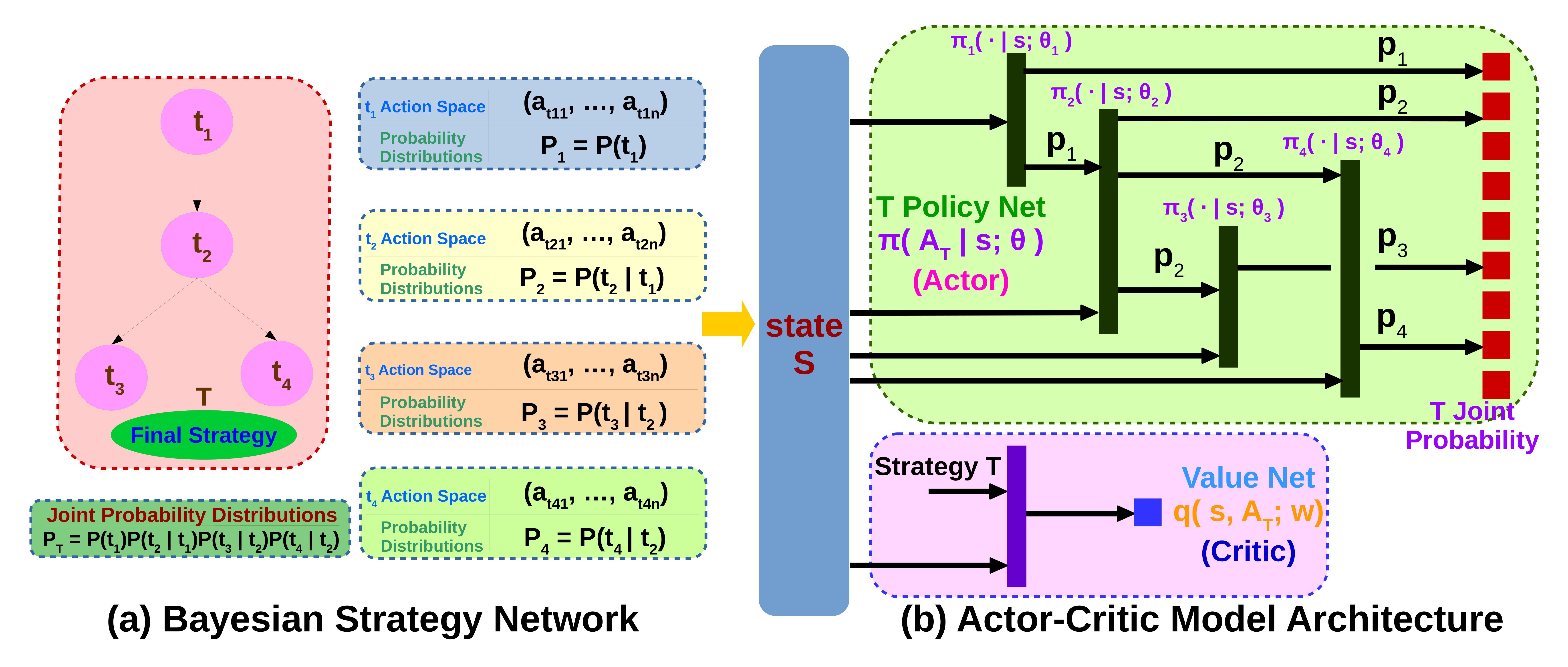

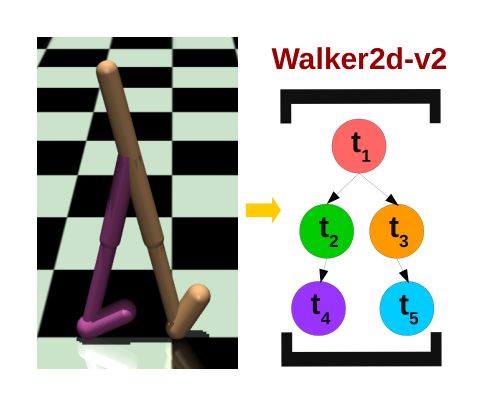

An intelligent agent with limited resources, working in hazardous, unstructured, and dynamic environments, must adopt reasonable strategies to improve system utility, decrease overall cost, and increase the probability of mission success. Doing so is challenging but crucial. Deep Reinforcement Learning (DRL) helps organize agents' behaviors and actions based on their state and can represent complex strategies. This research introduces a novel hierarchical strategy decomposition approach based on Bayesian chaining that separates an intricate policy into several simple sub-policies and organizes their relationships as Bayesian strategy networks (BSN).
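The decomposition idea can be illustrated with a toy example. The sketch below is not the BSN implementation; it only shows the chain-rule factorization that Bayesian chaining relies on, pi(move, tool | s) = pi(move | s) * pi(tool | move, s). The action names, states, and probabilities are hypothetical, and the sub-policies are lookup tables rather than learned networks.

```python
import random

# Toy illustration (assumption): a joint action (move, tool) is factored into
# two simple sub-policies chained like nodes in a Bayesian network.

def pi_move(state):
    """Sub-policy 1: distribution over moves given the state (made-up numbers)."""
    if state == "safe":
        return {"advance": 0.7, "retreat": 0.3}
    return {"advance": 0.2, "retreat": 0.8}

def pi_tool(state, move):
    """Sub-policy 2: distribution over tools given the state and the chosen move."""
    if move == "advance":
        return {"scan": 0.6, "grab": 0.4}
    return {"scan": 0.9, "grab": 0.1}

def joint_prob(state, move, tool):
    """Joint policy probability obtained by multiplying the chained sub-policies."""
    return pi_move(state)[move] * pi_tool(state, move)[tool]

def sample(dist, rng):
    """Draw one key from a {key: probability} dictionary."""
    keys = list(dist)
    weights = [dist[k] for k in keys]
    return rng.choices(keys, weights=weights, k=1)[0]

def sample_action(state, rng):
    """Sample the parent sub-policy first, then the child conditioned on it."""
    move = sample(pi_move(state), rng)
    tool = sample(pi_tool(state, move), rng)
    return move, tool

if __name__ == "__main__":
    rng = random.Random(0)
    print(sample_action("safe", rng))
    print(joint_prob("safe", "advance", "scan"))
```

In a DRL setting each table would be replaced by a learned sub-policy, but the sampling order and the product structure would stay the same.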

Trust Among Agents

How can robots trust each other for better cooperation? A relative needs entropy based robot-robot trust assessment model.

Cooperation in multi-agent and multi-robot systems (MAS/MRS) helps agents build formations, shapes, and patterns whose functions and purposes adapt to different situations. Relationships between agents, such as their spatial proximity and functional similarity, can play a crucial role in this cooperation. The level of trust between agents is an essential factor in evaluating the reliability and stability of their relationships, much as it is among people.

MAS Decision-Making

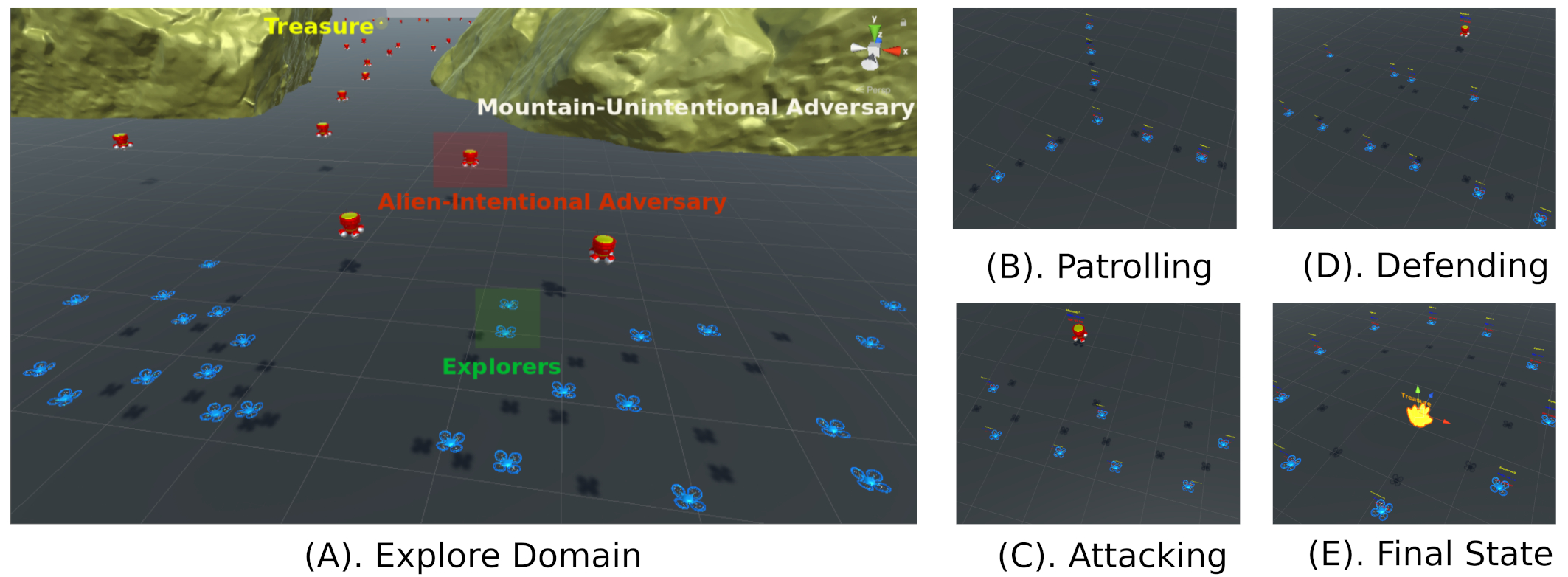

A Hierarchical Game-Theoretic Decision-Making for Cooperative Multi-Agent Systems Under the Presence of Adversarial Agents

The relationships among agents in multi-agent systems (MAS) working in hazardous and risky scenarios are complex, and game theory provides various methods to model agents' behaviors and strategies. In adversarial environments, the adversary can be regarded as intentional or unintentional based on its needs and motivations. An agent adopts suitable strategies to maximize its utility, satisfying its current needs while minimizing expected costs. This research proposes a new network model called the Game-Theoretic Utility Tree (GUT), which combines the core principles of game theory and utility theory to achieve cooperative decision-making for MAS in adversarial environments.
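To give a feel for hierarchical game-theoretic decision-making, here is a minimal sketch under strong assumptions. It is not the GUT model itself: the tree layout, strategy labels, and payoff numbers are invented for illustration, and each level is resolved with a simple pure-strategy maximin rule rather than whatever solution concept the paper uses.

```python
# Hypothetical two-level decision tree. Each node holds a payoff matrix whose
# rows are the team's strategies and whose columns are the adversary's.

def maximin(payoff):
    """Return (row_index, guaranteed_value) for the row player's pure maximin choice."""
    worst = [min(row) for row in payoff]
    best = max(range(len(payoff)), key=lambda i: worst[i])
    return best, worst[best]

TREE = {
    "labels": ["attack", "defend"],
    "payoff": [[3, -2],   # attack: good if unopposed, costly otherwise
               [1, 0]],   # defend: modest but safe
    "children": {
        "attack": {
            "labels": ["flank", "charge"],
            "payoff": [[2, 0], [5, -3]],
            "children": {},
        },
        "defend": {
            "labels": ["hold", "regroup"],
            "payoff": [[2, 1], [4, -1]],
            "children": {},
        },
    },
}

def decide(node):
    """Walk down the tree, solving one small game per level."""
    path = []
    while node:
        index, _ = maximin(node["payoff"])
        label = node["labels"][index]
        path.append(label)
        node = node["children"].get(label)
    return path

if __name__ == "__main__":
    print(decide(TREE))
```

The point of the hierarchy is that each level only has to solve a small game, and the choice at one level selects which sub-game is played next.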

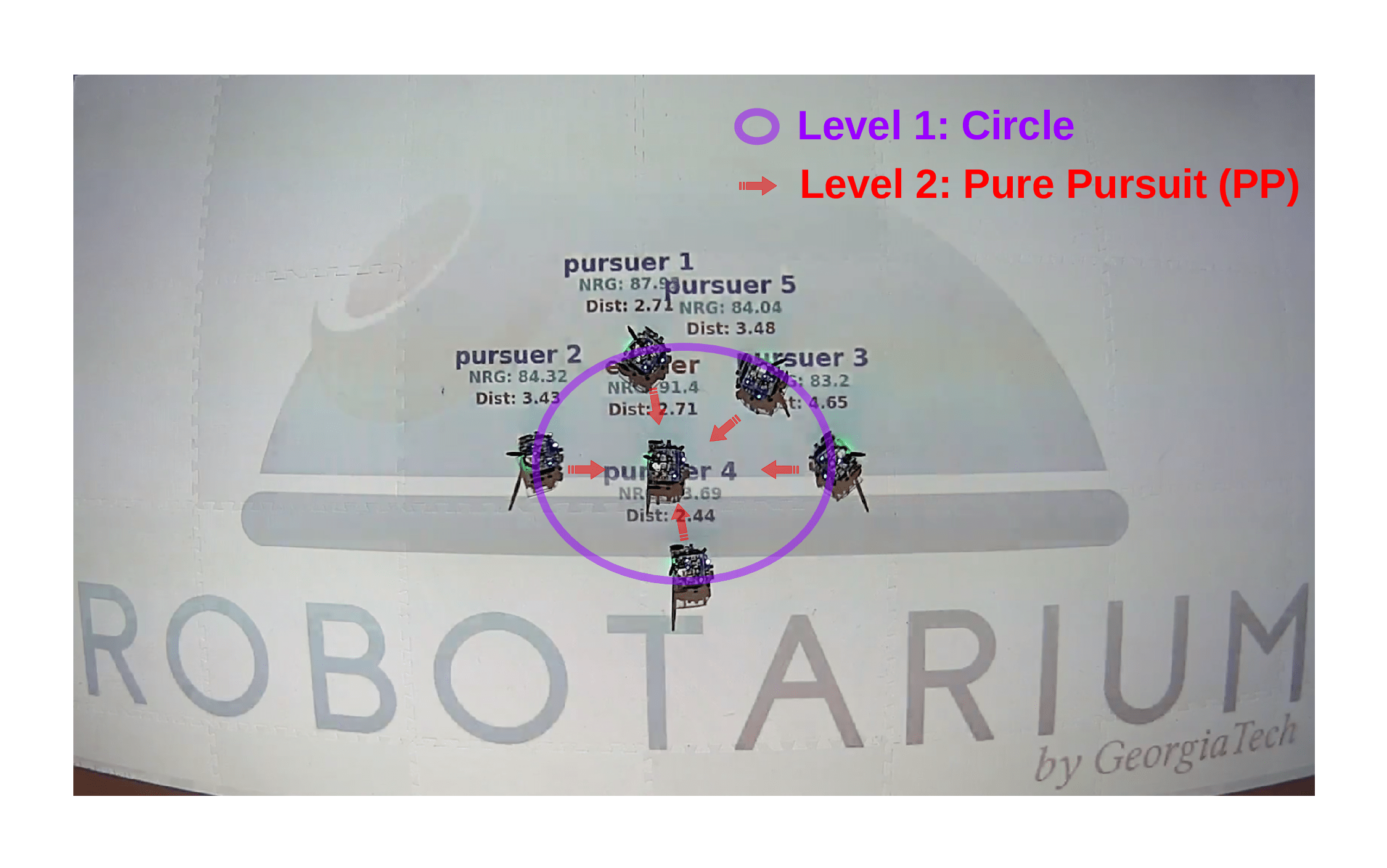

Game-theoretic Utility Tree for Multi-Robot Cooperative Pursuit Strategy

This work extends the GUT to the pursuit domain to achieve cooperative MAS decision-making when catching an evader. We demonstrate the GUT's performance on real robots using the Robotarium platform and compare it with the conventional constant bearing (CB) and pure pursuit (PP) strategies.
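For readers unfamiliar with the two baseline strategies, the following sketch shows their textbook planar forms. It is an illustration only, not the code used in the Robotarium experiments; the function names and the fallback to pure pursuit when the bearing cannot be held are assumptions.

```python
import math

def pure_pursuit(pursuer, evader, speed):
    """PP: head straight at the evader's current position."""
    dx, dy = evader[0] - pursuer[0], evader[1] - pursuer[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        return (0.0, 0.0)
    return (speed * dx / dist, speed * dy / dist)

def constant_bearing(pursuer, evader, evader_vel, speed):
    """CB: match the evader's velocity across the line of sight (LOS) and spend
    the remaining speed closing along it, so the LOS direction stays fixed."""
    dx, dy = evader[0] - pursuer[0], evader[1] - pursuer[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        return (0.0, 0.0)
    ux, uy = dx / dist, dy / dist                      # unit LOS vector
    along = evader_vel[0] * ux + evader_vel[1] * uy    # evader speed along LOS
    px, py = evader_vel[0] - along * ux, evader_vel[1] - along * uy
    perp_sq = px * px + py * py
    if perp_sq > speed * speed:
        # Pursuer is too slow to hold the bearing; fall back to PP (assumption).
        return (speed * ux, speed * uy)
    closing = math.sqrt(speed * speed - perp_sq)
    return (px + closing * ux, py + closing * uy)

if __name__ == "__main__":
    p, e, e_vel = (0.0, 0.0), (10.0, 0.0), (0.0, 1.0)
    print(pure_pursuit(p, e, 2.0))
    print(constant_bearing(p, e, e_vel, 2.0))
```

With the evader moving perpendicular to the line of sight, PP aims at where the evader is now, while CB leads the target by matching its sideways motion.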

Cooperative MAS Cognitive Modeling

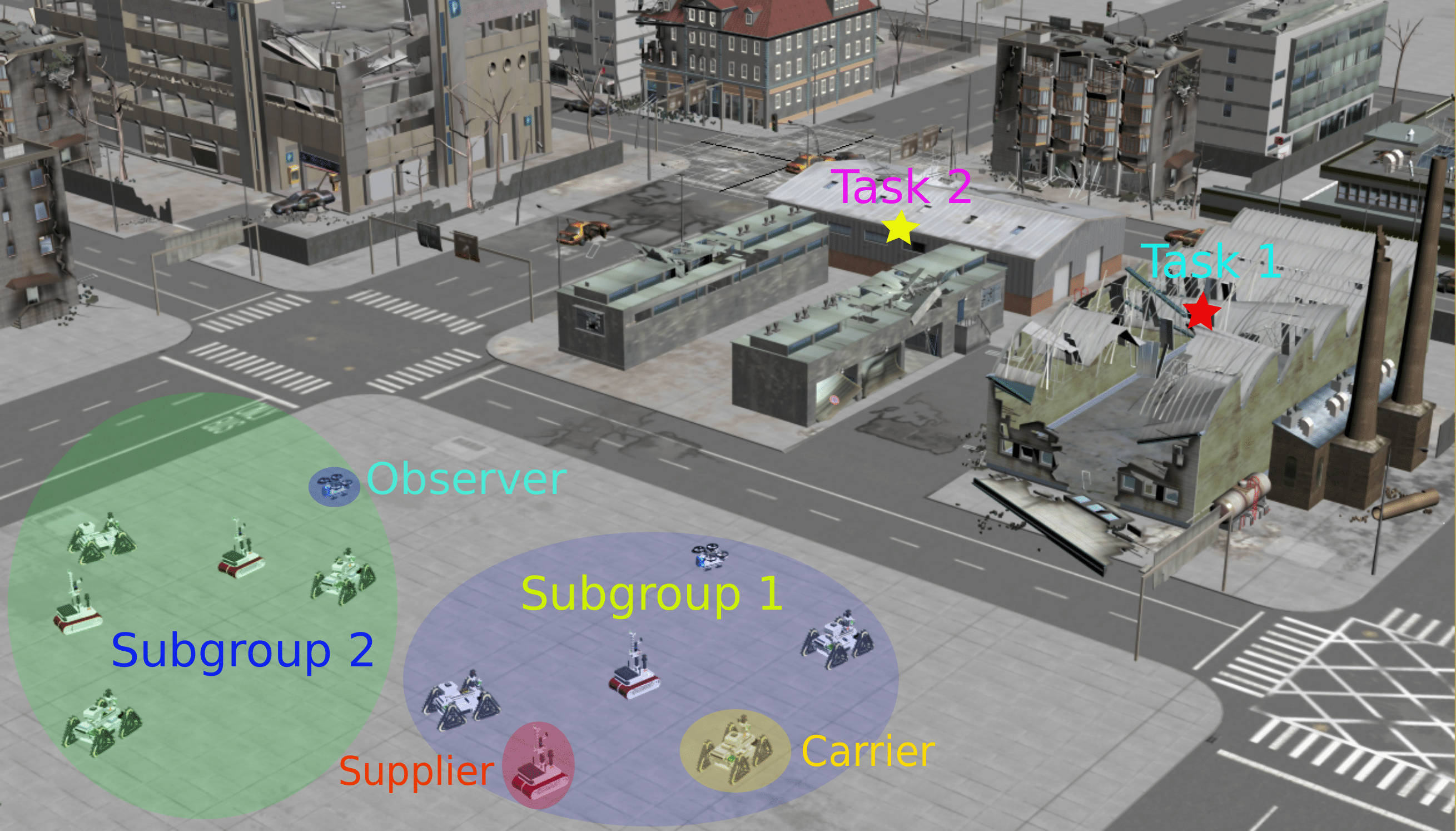

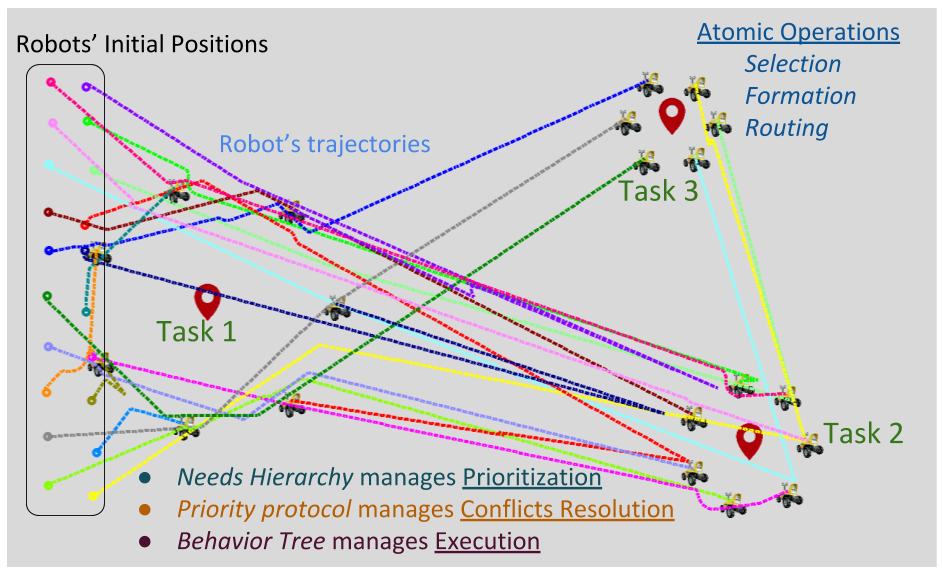

Hierarchical Needs Based Self-Adaptive Framework For Cooperative Multi-Robot System

Natural systems (living beings) and artificial systems (robotic agents) are characterized by apparently complex behaviors that emerge from often nonlinear spatiotemporal interactions among a large number of components at different levels of organization. In a swarm system, simple principles acting at the agent level can produce complex behavior at the global level. Swarm intelligence is the collective behavior of distributed, self-organized systems. Multi-robot systems (MRS) potentially share the properties of swarm intelligence in practical applications such as search, rescue, mining, map construction, and exploration.

Intelligent Social Systems and Swarm Robotics Lab (IS3R)
